Overview

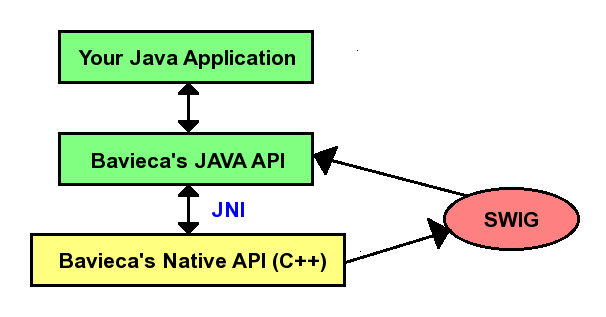

Bavieca's Java API (Application Programming Interface) provides an easy way to incorporate speech-recognition capabilities into a Java application. The API is simply a wrapper around Bavieca's native API. The wrapper is generated automatically with SWIG and uses JNI (Java Native Interface) to enable communication between Java and the native C++ Bavieca library. The figure above illustrates the interaction between the user application, the Java library and the native C++ library.

Since the Java API is simply a wrapper around the Bavieca native library, it exposes an equivalent interface and an identical set of features:

- Stream-based feature extraction: speech features can be extracted from audio samples as the audio becomes available and is fed into the feature extraction process. Feature normalization is done in stream mode, and feature vectors (instead of audio samples) are used as input to the various speech processing functions (recognition, speech activity detection, alignment, etc.). The same set of features can be used first for speech activity detection and then for recognition.

- Stream-based speech recognition: while Bavieca's command line speech recognizers (dynamicdecoder and wfsadecoder) enable speech recognition in batch mode, which is ideal for experimentation, they do not provide the means to perform speech recognition over a live stream of audio. Bavieca's API enables speech recognition in live mode.

- Stream-based speech activity detection: speech activity detection is a very common mechanism to reduce the amount of audio fed to the speech recognition system in order to make better use of computational resources and reduce recognition errors.

- Speech-to-text alignment: time-alignment information of words and phonemes within an utterance can be generated to be used, for example, to highlight words and phonemes while playing back speech, or as input data to perform synthetic lip movement.

- Live-mode MLLR speaker adaptation: Coming soon...
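The stream-based pattern that the features above share can be sketched without Bavieca itself: audio arrives in fixed-size chunks and each chunk is handed to a processing step as soon as it is complete. The class and method names below (`ChunkedAudioReader`, `readChunk`, `chunkSizes`) are hypothetical and are not part of Bavieca's API. The 3200-byte chunk size is borrowed from the decoding example in this document.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Bavieca: splits a byte stream into chunks.
public class ChunkedAudioReader {

    // Fills the chunk as far as possible. InputStream.read may return fewer
    // bytes than requested, so the call is repeated until the chunk is full
    // or the stream ends. Returns the number of bytes read (0 at end of stream).
    static int readChunk(InputStream in, byte[] chunk) throws IOException {
        int total = 0;
        while (total < chunk.length) {
            int n = in.read(chunk, total, chunk.length - total);
            if (n < 0) {
                break; // end of stream
            }
            total += n;
        }
        return total;
    }

    // Returns the size of every chunk seen, in order. A real application
    // would extract features from each chunk instead of recording its size.
    static List<Integer> chunkSizes(InputStream in, int bytesPerChunk) throws IOException {
        List<Integer> sizes = new ArrayList<Integer>();
        byte[] chunk = new byte[bytesPerChunk];
        int n;
        while ((n = readChunk(in, chunk)) > 0) {
            sizes.add(n);
        }
        return sizes;
    }

    public static void main(String[] args) throws IOException {
        // 7000 bytes of silence stand in for captured audio
        InputStream in = new ByteArrayInputStream(new byte[7000]);
        System.out.println(chunkSizes(in, 3200)); // prints [3200, 3200, 600]
    }
}
```

The last chunk is usually shorter than the others, so whatever consumes the chunks has to be told how many bytes are valid.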

Bavieca's Java API is contained in a single Java class (BaviecaAPI) with a relatively small set of methods, all of which are defined in the file BaviecaAPI.java. In addition, about a dozen helper classes encapsulate recognition hypotheses, alignments, etc.

Prerequisites

- Java. Install the Java Development Kit (JDK), which includes support for the Java Native Interface (JNI), and modify the PATH environment variable so that it points to the directory containing the binaries for the java and javac tools. For OpenJDK, for example, this path will be something like /usr/lib/jvm/java-1.6.0-openjdk-1.6.0.0/bin

- SWIG (Simplified Wrapper and Interface Generator). This tool is used to connect Bavieca's C++ API with the Java language. Instructions on how to download and install SWIG can be found at www.swig.org

Install

To build Bavieca's Java API, follow the steps below. These steps assume that Java and SWIG are already installed (see Prerequisites).

- Generate the C++ wrapper using SWIG. Navigate to "<bavieca_base_dir>/java" and type ">build.sh". This script uses SWIG to generate the C++ wrapper to the native C++ library, and a package of Java classes (blt.bavieca) in the form of a jar file (baviecaAPI.jar). These Java classes form Bavieca's Java API and, along with the shared native library that will be generated in the following steps, are all a Java application needs to access Bavieca's speech recognition capabilities.

- Set the include directory for JNI in the makefile. Open the makefile definitions file in "<bavieca_base_dir>/src/Makefile.defines" and modify the variables JAVA_BASE and INCS_DIR_JNI to point to the directories in the JDK installation where the headers "jni.h" and "jni_md.h" are. These headers are needed to compile the C++ wrapper that serves as a bridge between Java and the native C++ library.

- Compile and build the Bavieca ASR Toolkit with Java support. Navigate to "<bavieca_base_dir>/java" and type ">make java-api". This will build Bavieca's native library along with the C++ wrapper generated by SWIG in the first step. This shared library (see "<bavieca_base_dir>/lib"), along with the jar containing the Java API that was created in the first step, is all a Java application needs to do speech recognition with Bavieca.

- Run the examples. Navigate to "<bavieca_base_dir>/java" and type ">examples.sh" in order to compile and execute the examples provided. These examples show how to perform recognition and alignment of audio using the Java API.
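Both examples in this document load the native library with System.loadLibrary("baviecaapijni"), which searches the directories listed in the java.library.path system property. When the load fails, a small check like the one below can help locate the problem. This is a sketch with hypothetical names (`NativeLibraryCheck`, `findLibrary`). It is an assumption here that the JVM is started with a library path that includes "<bavieca_base_dir>/lib" (for example through the standard -Djava.library.path option), because how examples.sh sets the path is not described above.

```java
import java.io.File;

// Hypothetical diagnostic helper, not part of Bavieca.
public class NativeLibraryCheck {

    // Returns the first file on the search path whose name matches the
    // platform-specific file name of the library, or null if there is none.
    static File findLibrary(String libraryName, String searchPath) {
        if (searchPath == null) {
            return null;
        }
        // e.g. "libbaviecaapijni.so" on Linux
        String fileName = System.mapLibraryName(libraryName);
        for (String dir : searchPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue;
            }
            File candidate = new File(dir, fileName);
            if (candidate.isFile()) {
                return candidate;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String searchPath = System.getProperty("java.library.path");
        File library = findLibrary("baviecaapijni", searchPath);
        if (library == null) {
            System.out.println("not found in java.library.path: " + searchPath);
        } else {
            System.out.println("found: " + library.getAbsolutePath());
        }
    }
}
```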

Example 1: speech recognition (exampleDecode.java)

The example below shows how to use the Java API to recognize simulated live audio. Audio is read from a file and fed to the recognizer in small chunks, as if it were being captured continuously from a microphone. Check the repository for an up-to-date version of this example.

/*---------------------------------------------------------------------------------------------*
 * Copyright (C) 2012 Daniel Bolaños - www.bltek.com - Boulder Language Technologies *
 * *
 * www.bavieca.org is the website of the Bavieca Speech Recognition Toolkit *
 * *
 * Licensed under the Apache License, Version 2.0 (the "License"); *
 * you may not use this file except in compliance with the License. *
 * You may obtain a copy of the License at *
 * *
 * http://www.apache.org/licenses/LICENSE-2.0 *
 * *
 * Unless required by applicable law or agreed to in writing, software *
 * distributed under the License is distributed on an "AS IS" BASIS, *
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. *
 * See the License for the specific language governing permissions and *
 * limitations under the License. *
 *---------------------------------------------------------------------------------------------*/

import java.lang.*;
import java.io.*;
import java.nio.ByteBuffer;
import java.nio.ShortBuffer;
import java.nio.ByteOrder;

import blt.bavieca.*;

public class exampleDecode {

    // load the native library (Bavieca plus the SWIG-generated C++ wrapper)
    static {
        try {
            System.loadLibrary("baviecaapijni");
        } catch (UnsatisfiedLinkError e) {
            System.err.println("Native code library failed to load.\n" + e);
            System.exit(1);
        }
    }

    public static void main(String argv[]) {

        // check parameters
        if (argv.length != 2) {
            System.out.println("usage: exampleDecode [configurationFile] [rawAudioFile]");
            return;
        }

        System.out.println("[java] - example begins --------------");

        blt.bavieca.BaviecaAPI baviecaAPI = new blt.bavieca.BaviecaAPI(argv[0]);

        // INIT_SAD, INIT_ALIGNER, INIT_DECODER, INIT_ADAPTATION
        if (baviecaAPI.initialize((short)BaviecaAPI_SWIGConstants.INIT_DECODER) == true) {
            System.out.println("[java] library initialized successfully");
        } else {
            // nothing below can work without an initialized library
            System.err.println("[java] library initialization failed");
            baviecaAPI.delete();
            return;
        }

        String strFileRaw = argv[1];
        FileInputStream fis;
        try {

            // open the raw audio file (16KHz 16bits)
            fis = new FileInputStream(strFileRaw);

            // signal the beginning of an utterance
            baviecaAPI.decBeginUtterance();

            // simulate live audio (chunks of audio are read from a file and processed one by one)
            int iBytesChunk = 3200; // one 10th of a second
            byte [] bytesChunk = new byte[iBytesChunk];
            int iBytesRead = 0;
            int iAudioProcessed = 0;

            // read() returns -1 at the end of the file and may return fewer bytes than requested
            while ((iBytesRead = fis.read(bytesChunk)) > 0) {

                // turn bytes into shorts (little endian), using only the bytes actually read
                int iSamplesRead = iBytesRead/2;
                short [] sSamplesChunk = new short[iSamplesRead];
                ByteBuffer.wrap(bytesChunk,0,iSamplesRead*2).order(ByteOrder.LITTLE_ENDIAN).asShortBuffer().get(sSamplesChunk);

                // extract features from the chunk and feed them to the decoder
                long lFeatures [] = new long[1];
                float [] fFeatures = baviecaAPI.extractFeatures(sSamplesChunk,(long)iSamplesRead,lFeatures);
                iAudioProcessed += iBytesRead;
                System.out.println("[java] audio processed: " + ((float)iAudioProcessed)/32000.0 + " seconds");
                baviecaAPI.decProcess(fFeatures,lFeatures[0]);
            }
            System.out.println("[java] audio processed: " + ((float)iAudioProcessed)/32000.0 + " seconds");

            // get the hypothesis
            HypothesisI hypothesis = baviecaAPI.decGetHypothesis();
            if (hypothesis != null) {
                System.out.println("[java] hypothesis contains " + hypothesis.size() + " words");
                for(int i=0 ; i < hypothesis.size() ; ++i) {
                    WordHypothesisI wordHypothesisI = hypothesis.getWordHypothesis(i);
                    System.out.format("[java] hyp: (%5d) %-12s (%5d)%n",wordHypothesisI.getFrameStart(),
                        wordHypothesisI.getWord(),wordHypothesisI.getFrameEnd());
                }
                hypothesis.delete();
            } else {
                System.out.println("[java] null hypothesis!");
            }

            // signal the end of utterance
            baviecaAPI.decEndUtterance();

            // close the file
            fis.close();

        } catch (EOFException eof) {
            System.out.println("EOF reached");
        } catch (IOException ioe) {
            System.out.println("IO error: " + ioe);
        }

        // uninitialization
        baviecaAPI.uninitialize();
        baviecaAPI.delete();
        System.out.println("[java] library uninitialized successfully");

        System.out.println("[java] - example ends ----------------");
    }
}
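The byte handling in the example above can be studied in isolation. The sketch below, which uses hypothetical names (`PcmChunk`, `toSamples`, `seconds`) and no Bavieca calls, converts little-endian 16-bit PCM bytes into samples and turns a byte count into a duration, assuming the 16 kHz, 16-bit mono format stated in the example's comments.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.Arrays;

// Hypothetical helper, not part of Bavieca.
public class PcmChunk {

    static final int SAMPLE_RATE = 16000;  // samples per second, as in the example
    static final int BYTES_PER_SAMPLE = 2; // 16-bit samples

    // Converts the first bytesRead bytes into little-endian 16-bit samples.
    // A trailing odd byte cannot form a sample and is dropped.
    static short[] toSamples(byte[] bytes, int bytesRead) {
        int sampleCount = bytesRead / BYTES_PER_SAMPLE;
        short[] samples = new short[sampleCount];
        ByteBuffer.wrap(bytes, 0, sampleCount * BYTES_PER_SAMPLE)
                  .order(ByteOrder.LITTLE_ENDIAN)
                  .asShortBuffer()
                  .get(samples);
        return samples;
    }

    // Duration in seconds of the given number of audio bytes.
    static double seconds(long byteCount) {
        return byteCount / (double) (SAMPLE_RATE * BYTES_PER_SAMPLE);
    }

    public static void main(String[] args) {
        // bytes 01 00 -> 1, FF FF -> -1, 00 01 -> 256; the seventh byte is dropped
        byte[] chunk = {0x01, 0x00, (byte) 0xFF, (byte) 0xFF, 0x00, 0x01, 0x7F};
        System.out.println(Arrays.toString(toSamples(chunk, 7))); // prints [1, -1, 256]
        System.out.println(seconds(3200));                        // prints 0.1
    }
}
```

This is why a 3200-byte chunk corresponds to one tenth of a second: 16000 samples per second at 2 bytes per sample gives 32000 bytes per second.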

Example 2: speech alignment (exampleAlign.java)

The example below shows how to align text to audio in order to get time-alignment information for each word and phoneme. This information can be used for different purposes, such as highlighting words as they are played back, identifying phone boundaries, or producing visual speech. Check the repository for an up-to-date version of this example.

/*---------------------------------------------------------------------------------------------*
 * Copyright (C) 2012 Daniel Bolaños - www.bltek.com - Boulder Language Technologies *
 * *
 * www.bavieca.org is the website of the Bavieca Speech Recognition Toolkit *
 * *
 * Licensed under the Apache License, Version 2.0 (the "License"); *
 * you may not use this file except in compliance with the License. *
 * You may obtain a copy of the License at *
 * *
 * http://www.apache.org/licenses/LICENSE-2.0 *
 * *
 * Unless required by applicable law or agreed to in writing, software *
 * distributed under the License is distributed on an "AS IS" BASIS, *
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. *
 * See the License for the specific language governing permissions and *
 * limitations under the License. *
 *---------------------------------------------------------------------------------------------*/

import java.lang.*;
import java.io.*;
import java.nio.ByteBuffer;
import java.nio.ShortBuffer;
import java.nio.ByteOrder;

import blt.bavieca.*;

public class exampleAlign {

    // load the native library (Bavieca plus the SWIG-generated C++ wrapper)
    static {
        try {
            System.loadLibrary("baviecaapijni");
        } catch (UnsatisfiedLinkError e) {
            System.err.println("Native code library failed to load.\n" + e);
            System.exit(1);
        }
    }

    public static void main(String argv[]) {

        // check parameters
        if (argv.length != 3) {
            System.out.println("usage: exampleAlign [configurationFile] [rawAudioFile] [txtTranscriptionFile]");
            return;
        }

        System.out.println("[java] - example begins --------------");

        blt.bavieca.BaviecaAPI baviecaAPI = new blt.bavieca.BaviecaAPI(argv[0]);

        // INIT_SAD, INIT_ALIGNER, INIT_DECODER, INIT_ADAPTATION
        if (baviecaAPI.initialize((short)BaviecaAPI_SWIGConstants.INIT_ALIGNER) == true) {
            System.out.println("[java] library initialized successfully");
        } else {
            // nothing below can work without an initialized library
            System.err.println("[java] library initialization failed");
            baviecaAPI.delete();
            return;
        }

        try {

            FileInputStream fis;

            // open the raw audio file (16KHz 16bits)
            String strFileRaw = argv[1];
            fis = new FileInputStream(strFileRaw);
            int iBytes = fis.available();
            byte [] bytes = new byte[iBytes];

            // read bytes of audio
            if (fis.read(bytes) != iBytes) {
                System.out.println("Error: unable to read from file");
                fis.close();
                return;
            }
            System.out.println("[java] audio read: " + ((float)bytes.length)/32000.0 + " seconds");

            // turn bytes into shorts (little endian)
            short [] sSamples = new short[iBytes/2];
            ByteBuffer.wrap(bytes).order(ByteOrder.LITTLE_ENDIAN).asShortBuffer().get(sSamples);

            // extract features
            long lFeatures [] = new long[1];
            float [] fFeatures = baviecaAPI.extractFeatures(sSamples,(long)sSamples.length,lFeatures);
            System.out.println("[java] features extracted: " + lFeatures[0]);

            // read the transcription to align against
            BufferedReader br = new BufferedReader(new FileReader(argv[2]));
            StringBuffer str = new StringBuffer();
            String line = br.readLine();
            while (line != null) {
                str.append(line);
                str.append("\n");
                line = br.readLine();
            }
            br.close();
            String strWords = str.toString();

            // forced alignment (Viterbi alignment)
            AlignmentI alignment = baviecaAPI.align(fFeatures,lFeatures[0],strWords,false);
            if (alignment != null) {
                for(int i=0 ; i < alignment.size() ; ++i) {
                    WordAlignmentI wordAlignment = alignment.getWordAlignment(i);
                    System.out.format("[java] word: (%5d) %-12s (%5d)%n",wordAlignment.getFrameStart(),wordAlignment.getWord(),wordAlignment.getFrameEnd());
                }
                // release the alignment only if one was returned
                alignment.delete();
            } else {
                System.out.println("[java] alignment is null");
            }

            // close the file
            fis.close();

        } catch (EOFException eof) {
            System.out.println("EOF reached");
        } catch (IOException ioe) {
            System.out.println("IO error: " + ioe);
        }

        // uninitialization
        baviecaAPI.uninitialize();
        baviecaAPI.delete();
        System.out.println("[java] library uninitialized successfully");

        System.out.println("[java] - example ends ----------------");
    }
}
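The alignment example reports word boundaries as frame indices. To highlight words during playback, those indices have to be converted into times. The sketch below shows the arithmetic with hypothetical names (`FrameTimes`, `frameToSeconds`). The frame shift of 10 milliseconds is an assumption made for illustration only, since the actual value depends on the feature extraction settings in the configuration file and is not stated in this document.

```java
// Hypothetical helper, not part of Bavieca.
public class FrameTimes {

    // ASSUMPTION: one feature frame every 10 ms. Replace this with the
    // frame shift actually used by the feature configuration.
    static final double ASSUMED_FRAME_SHIFT_MS = 10.0;

    // Time in seconds at which the frame with the given index starts.
    static double frameToSeconds(int frameIndex, double frameShiftMs) {
        return frameIndex * frameShiftMs / 1000.0;
    }

    public static void main(String[] args) {
        // under the assumed frame shift, frame 150 starts 1.5 seconds into the audio
        System.out.println(frameToSeconds(150, ASSUMED_FRAME_SHIFT_MS)); // prints 1.5
    }
}
```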